Comprehensive and Detailed in-Depth Explanation:

Understanding Vulnerability Scanning Types:

Vulnerability scans can be categorized by two major factors:

Active vs. Passive:

Active Scans: Actively probe the target system, gathering detailed data.

Passive Scans: Monitor network traffic and analyze data without directly probing the system.

Credentialed vs. Non-Credentialed:

Credentialed Scans: Utilize valid user credentials to access system information.

Non-Credentialed Scans: Rely on publicly accessible data and network probing.
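
The two factors above combine into four scan types. A minimal sketch of that classification follows; the names `Mode`, `Access`, and `ScanType` are hypothetical and exist only for illustration, and the `can_read_local_config` rule simply encodes this explanation's claim rather than the behavior of any particular scanner.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    ACTIVE = "active"    # probes the target directly
    PASSIVE = "passive"  # only observes network traffic


class Access(Enum):
    CREDENTIALED = "credentialed"          # logs in with valid credentials
    NON_CREDENTIALED = "non-credentialed"  # sees only what is exposed externally


@dataclass(frozen=True)
class ScanType:
    mode: Mode
    access: Access

    def can_read_local_config(self) -> bool:
        # Assumption taken from the explanation: reading local configuration
        # needs both direct probing and authorized access.
        return self.mode is Mode.ACTIVE and self.access is Access.CREDENTIALED

    def label(self) -> str:
        return f"{self.mode.value}, {self.access.value}"


# All four combinations of the two factors.
ALL_SCAN_TYPES = [ScanType(m, a) for m in Mode for a in Access]

for scan in ALL_SCAN_TYPES:
    print(scan.label(), "->", scan.can_read_local_config())
```

Only the active, credentialed combination returns `True` in this model.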

Why the Correct Answer is D (An active, credentialed scan):

An active, credentialed scan combines direct system probing with authorized access.

This scan type provides the most accurate and comprehensive data, including:

Configuration settings: Verifies security configurations like firewall rules, user privileges, and patch levels.

Detailed vulnerability data: Can access internal system information and log files.

Thorough system analysis: Can enumerate software versions, system settings, and misconfigurations.

Credentialed scans can access local configuration files and OS-level data, giving a full picture of the system's security posture.

Example Scenario:

A systems administrator wants to verify that all systems are patched and properly configured according to company policies.

An active, credentialed scan can log into each system and directly check patch versions and configuration files, while a non-credentialed scan would only be able to detect open ports and basic system information.
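
The patch check in this scenario can be sketched as a comparison between the versions a credentialed scan collects and the minimums set by policy. This is an illustrative sketch only: the package names, version numbers, and the helpers `parse_version` and `find_unpatched` are hypothetical, and the example assumes simple dotted numeric versions rather than any real scanner's inventory format.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version such as '3.0.13' into (3, 0, 13) for comparison."""
    return tuple(int(part) for part in text.split("."))


def find_unpatched(installed: dict[str, str], required: dict[str, str]) -> list[str]:
    """Return the packages that are missing or older than the required minimum."""
    findings = []
    for name, minimum in sorted(required.items()):
        current = installed.get(name)
        if current is None or parse_version(current) < parse_version(minimum):
            findings.append(name)
    return findings


# Hypothetical inventory a credentialed scan could collect after logging in.
installed_on_host = {"openssl": "3.0.8", "openssh": "9.6", "sudo": "1.9.15"}
# Hypothetical company policy: minimum acceptable versions.
policy_minimums = {"openssl": "3.0.13", "openssh": "9.6", "sudo": "1.9.15"}

print(find_unpatched(installed_on_host, policy_minimums))  # ['openssl']
```

A non-credentialed scan has no equivalent of `installed_on_host`, since it cannot read the local package inventory.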

Why the Other Options Are Incorrect:

A. A passive, credentialed scan:

Passive scans do not interact directly with the system, even if credentials are available.

They lack accuracy in identifying configuration settings since they only monitor traffic.

B. A passive, non-credentialed scan:

This method is the least accurate, as it only monitors network traffic without logging into systems.

It provides surface-level information and is typically used for detecting anomalies rather than deep scanning.

C. An active, non-credentialed scan:

While active scanning provides some detail, non-credentialed scans are limited to external checks.

They can detect open ports and basic vulnerabilities but cannot access internal configurations or system files.
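
To illustrate what an external check looks like, here is a minimal sketch of an active, non-credentialed probe: a plain TCP connection attempt using Python's standard `socket` module. The helper names `is_port_open` and `open_ports` are hypothetical, and real scanners do far more than this (service fingerprinting, vulnerability signatures).

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Attempt a TCP connection; True means something accepted it."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unreachable: treat the port as not open.
        return False


def open_ports(host: str, ports: list[int]) -> list[int]:
    """Return the subset of ports that accepted a connection."""
    return [p for p in ports if is_port_open(host, p)]
```

The probe learns only whether a port accepts connections. It never logs in, so patch levels and configuration files stay out of reach.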

Key Differences:

| Scan Type | Accuracy for Configuration Data | Intrusiveness | Use Case |
| --- | --- | --- | --- |
| Passive, Credentialed | Low | Low | Network monitoring |
| Passive, Non-Credentialed | Very Low | Low | Anomaly detection |
| Active, Non-Credentialed | Medium | High | Initial vulnerability assessment |
| Active, Credentialed | High | High | Comprehensive vulnerability analysis |

Best Practice:

Always use active, credentialed scans for in-depth vulnerability assessment, especially when accuracy in configuration validation is essential.

Ensure that credentials provided for scanning have read-only permissions to minimize risk.

Extract from CompTIA SecurityX CAS-005 Study Guide:

The CompTIA SecurityX CAS-005 Official Study Guide highlights that active, credentialed vulnerability scans are the most thorough and accurate. These scans can access system configurations, file permissions, and patch levels, which are crucial for maintaining system security. Non-credentialed scans, while useful for surface-level checks, do not provide the same level of insight.

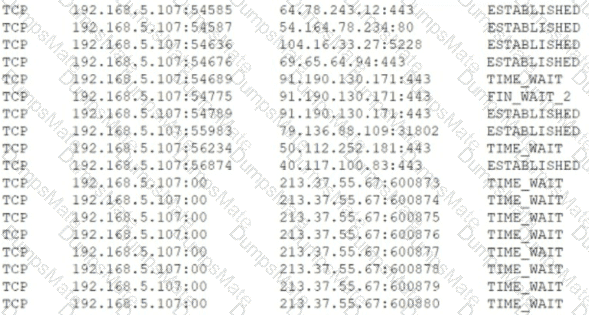

Which of the following is MOST likely happening to the server?
