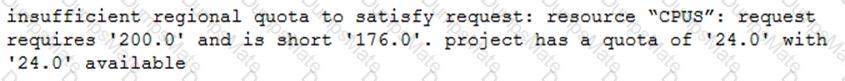

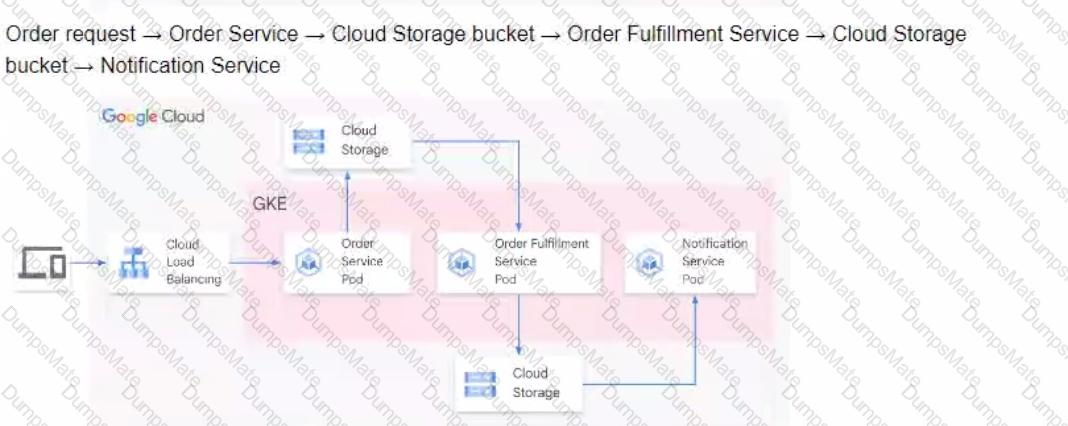

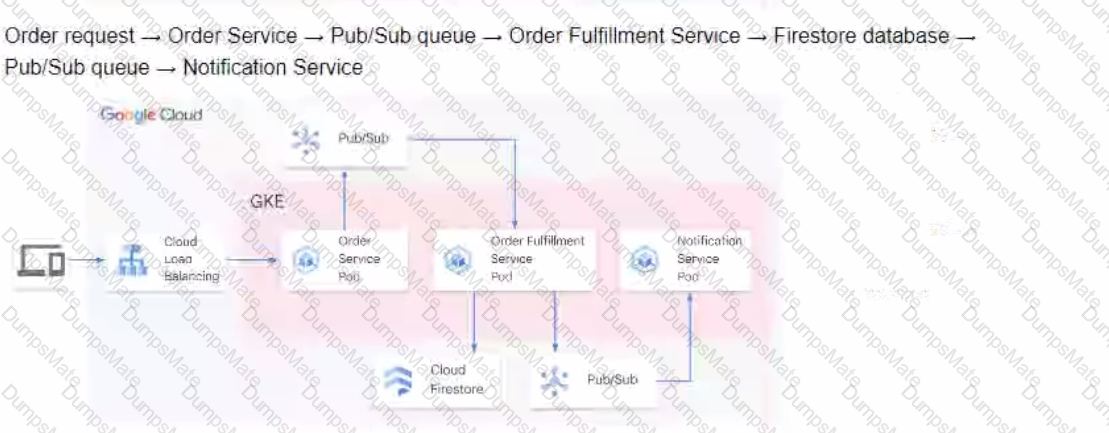

You are developing a flower ordering application. Currently you have three microservices:

• Order Service (receives the orders).

• Order Fulfillment Service (processes the orders).

• Notification Service (notifies the customer when the order is filled).

You need to determine how the services will communicate with each other. You want incoming orders to be processed quickly, and you need to collect order information for fulfillment. You also want to make sure that orders are not lost between your services and that the services can communicate asynchronously. How should the requests be processed?
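To make the requirements concrete, here is a minimal sketch of asynchronous hand-off between the three services. It is an illustration under stated assumptions, not a recommended answer: the in-memory `queue.Queue` objects stand in for whatever durable messaging channel is chosen, and every name here (`orders_channel`, `notifications_channel`, `order_service`, `run_demo`) is hypothetical. An in-memory queue does not survive a crash, so a real system would need a persistent channel to meet the "orders are not lost" requirement.

```python
import queue
import threading

# Hypothetical in-memory stand-ins for the channels between services.
# Assumption: a real deployment would use a durable, persistent channel.
orders_channel = queue.Queue()
notifications_channel = queue.Queue()
notified = []


def order_service(order):
    """Accept an order and return at once; fulfillment happens later."""
    orders_channel.put(order)
    return "accepted"


def fulfillment_worker():
    """Process orders one at a time and emit a 'filled' event for each."""
    while True:
        order = orders_channel.get()
        if order is None:  # sentinel: no more orders
            orders_channel.task_done()
            notifications_channel.put(None)
            break
        notifications_channel.put({"order_id": order["order_id"], "status": "filled"})
        orders_channel.task_done()  # mark the order as handled


def notification_worker():
    """Notify the customer for each filled order."""
    while True:
        event = notifications_channel.get()
        if event is None:  # sentinel: no more events
            notifications_channel.task_done()
            break
        notified.append(event["order_id"])
        notifications_channel.task_done()


def run_demo(orders):
    """Run all three services and return the order IDs that were notified."""
    notified.clear()
    workers = [
        threading.Thread(target=fulfillment_worker),
        threading.Thread(target=notification_worker),
    ]
    for worker in workers:
        worker.start()
    for order in orders:
        order_service(order)
    orders_channel.put(None)
    for worker in workers:
        worker.join()
    return list(notified)
```

The Order Service returns as soon as the order is queued, so incoming orders are accepted quickly, while the other two services consume messages at their own pace. No service calls another directly.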